My Server Setup Part 5: Eliminating the Downtime

In this series of posts I am describing the infrastructure that hosts all of my websites and development projects.

- Part 1: Hosting and Configuration

- Part 2: Nomad Configuration

- Part 3: Nomad Jobs

- Part 4: Continuous Delivery

- Part 5: Eliminating the Downtime

Summary

- In the Outstanding Issues section of part 4 I noted that the update mechanism results in a few seconds of downtime for a site when it’s updated. This is clearly unacceptable.

- The resolution for this was to separate out the web server from the content.

- Docker images are no longer built containing the site content.

- Instead a sidecar service clones and periodically pulls the repo into a directory on disk shared by the sidecar and the web server containers.

- Downtime has been almost completely eliminated (a minuscule window per file updated).

How Nomad enables this solution

When a Docker-based Nomad job is started, a number of directories are created and automatically mounted into the containers. One of these, known as the alloc directory, is shared across all tasks in a group. This directory is what makes the solution work.

Web server configuration

The setup still uses statigo as the web server. Since the last post a number of improvements have been made, specifically around metrics and logging, but fundamentally it’s still the small web server it was last time I discussed it.

The difference now is that the web server container is used without any changes - the stock server runs for each site hosted on Nomad. You can see this in the config section of the webserver task in the Nomad job:

config {

image = "stut/statigo:v7"

ports = ["http"]

args = [

"--root-dir", "${NOMAD_ALLOC_DIR}/www",

]

}

The root directory is set to the www folder inside the alloc directory. Nomad creates the alloc directory when it creates the allocation, which is a group of tasks that must run together on the same node. Essentially this folder is shared across all tasks in the group.

In this case the group consists of two tasks: statigo and syncer. The statigo task is simply the web server pointed at that shared directory. The syncer task is where the “magic” happens.
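To make the shape of the group concrete, here is a minimal sketch of how the two tasks could sit together. It is not the actual job file: the group name `blog` and the dynamic ports in the `network` block are assumptions for illustration, while the task names, images, port labels and lifecycle settings are taken from the snippets in this post.

```hcl
# Sketch only: the group name and the dynamic port declarations are assumptions.
group "blog" {
  network {
    port "http" {}        # port for the web server
    port "http_syncer" {} # port for the syncer's HTTP endpoint
  }

  # Clones and pulls the repository into ${NOMAD_ALLOC_DIR}/www.
  task "syncer" {
    driver = "docker"

    lifecycle {
      hook    = "prestart"
      sidecar = true
    }

    config {
      image = "stut/syncer:v7"
      ports = ["http_syncer"]
    }
  }

  # Serves whatever is currently in ${NOMAD_ALLOC_DIR}/www.
  task "statigo" {
    driver = "docker"

    config {
      image = "stut/statigo:v7"
      ports = ["http"]
      args  = ["--root-dir", "${NOMAD_ALLOC_DIR}/www"]
    }
  }
}
```

Both tasks refer to the same path because Nomad mounts the allocation's shared directory into every task in the group.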

Syncer configuration

The syncer task is running a new component, oddly called syncer. This component is responsible for cloning a git repository and then periodically pulling for changes. This mechanism is what will now update the content of the static website with next to zero downtime.

The syncer Docker image is available on Docker Hub and can be used directly, and the source is available on GitHub. The syncer Nomad task is configured as follows, broken up with some explanation.

task "syncer" {

driver = "docker"

lifecycle {

hook = "prestart"

sidecar = true

}

The lifecycle settings are important: they ensure that the syncer starts before the other task(s) in the group, and specifically that the initial clone is done before the statigo task is started. If this is not the case then the root directory for statigo will not exist when it starts, so it will fail.

config {

image = "stut/syncer:v7"

ports = ["http_syncer"]

}

Nothing too complicated here. As with statigo, we get the version for syncer from a Terraform local variable.

template {

data = <<EOF

SYNCER_SOURCE="git@github.com:stut/blog.git"

SYNCER_DEST="{{ env "NOMAD_ALLOC_DIR" }}/www"

SYNCER_GIT_BRANCH="deploy"

SYNCER_SSH_KEY_FILENAME="{{ env "NOMAD_SECRETS_DIR" }}/ssh_key"

SYNCER_UPDATE_INTERVAL="1h"

EOF

destination = "local/env"

env = true

change_mode = "restart"

}

This sets the environment variables which configure the syncer instance. They should be self-explanatory. One thing to note in this configuration is that the branch, deploy, only contains the output from running Jekyll on the repository. This is a GitHub action covered later in this article. In your configuration the site to serve may be in a sub-directory of the repository, in which case you’ll need to adjust the root directory passed to statigo.
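As an illustration of the sub-directory case, the statigo config might be adjusted along these lines. The `_site` directory name is purely hypothetical; substitute whatever path your repository actually uses.

```hcl
config {
  image = "stut/statigo:v7"
  ports = ["http"]

  args = [
    # "_site" is a hypothetical sub-directory of the cloned repository
    "--root-dir", "${NOMAD_ALLOC_DIR}/www/_site",
  ]
}
```

SYNCER_DEST stays the same in this case, since the syncer still clones the whole repository into the www folder; only the directory the web server serves from changes.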

The change_mode is largely unnecessary since none of the variables used will change unless the task is already being restarted, but I’ve put it there just in case I ever move these variables into Consul or Vault.

template {

data = <<EOF

[INSERT SSH PRIVATE KEY HERE, IDEALLY FROM A SECRETS MANAGEMENT SYSTEM SUCH AS VAULT]

EOF

destination = "secrets/ssh_key"

change_mode = "restart"

}

This is the private key that will be used when cloning the git repository. If the repository does not require this (i.e. it’s a public repo) it can be omitted, but don’t forget to remove the SSH options from the environment template above.
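If the key is held in Vault, the template could read it when it is rendered rather than having it embedded in the job file. The following is a sketch under several assumptions: the Nomad cluster is already integrated with Vault, the task has a `vault` block granting it read access, and the key is stored in a KV version 2 secret at the hypothetical path `secret/data/syncer` under a field named `ssh_key`.

```hcl
template {
  # Hypothetical secret path and field name; KV v2 nests the
  # stored fields under .Data.data.
  data = <<EOF
{{ with secret "secret/data/syncer" }}{{ .Data.data.ssh_key }}{{ end }}
EOF

  destination = "secrets/ssh_key"
  change_mode = "restart"
}
```

With this arrangement the `change_mode = "restart"` setting starts to matter, because a change to the key in Vault re-renders the file and restarts the syncer.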

resources {

cpu = 4

memory = 10

}

logs {

max_files = 1

max_file_size = 10

}

Resource requirements are pretty low for this task, but be sure to check the logs when you run it for the first time. The memory in particular is determined primarily by the amount required to perform the initial clone. For a large repository this may need to be increased.

service {

port = "http_syncer"

meta {

metrics_port = "${NOMAD_HOST_PORT_http_syncer}"

}

check {

type = "http"

port = "http_syncer"

path = "/health"

interval = "1m"

timeout = "2s"

}

}

Finally, we configure a service and an associated health check. We add a meta variable which allows Prometheus within the cluster to automatically pick up the new metrics source, and the HTTP server responds successfully to requests for /health, so that can be used as a health check.

Group startup process

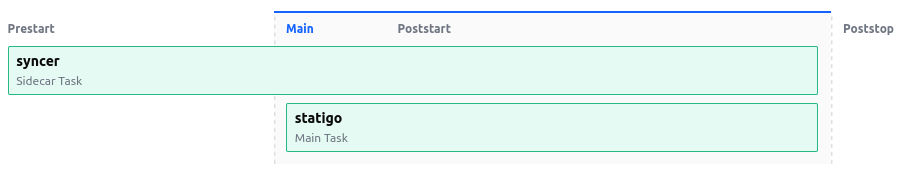

The Nomad user interface displays the group lifecycle in in a pretty straightforward manner:

The prestart tasks start first, and only once they’re running and healthy will the main tasks start. In this instance there are no poststart or poststop tasks, but you can imagine when and why those would be started.

For the syncer/statigo group this translates to the syncer starting and cloning the repo into the shared directory, then the statigo task is started with its root pointed at that directory.

Both the syncer and statigo have a health check endpoint, so if the syncer can’t perform the initial clone the statigo process won’t start, and if the statigo task can’t access the shared directory for some reason it will fail to start.

Updates

The syncer process will periodically pull on the clone which will update the website content. Due to the mechanism git uses to pull on a repo this results in milliseconds of “downtime” per file in the repo. This is what we want!

What happens if…?

…the repo clone already exists in the shared directory?

The syncer will see the directory, will confirm that it’s a valid clone of the right branch of the right repo, and will perform a pull instead of a clone. If it’s not a clone of the right branch of the right repo it will crash out with an error causing the Nomad deployment to fail.

…the repo can’t be cloned for some reason?

The syncer will exit with an error causing the Nomad deployment to fail.

…there are changes in the clone for some reason?

There is a configuration option specifying whether a hard reset should be done if changes are found. If the hard reset fails or is not enabled the syncer process will exit.

Experience so far

This arrangement has now been running my static sites for a couple of weeks at the time of writing and so far it’s been working perfectly. If you can see this post that’s a testament to that!

There are certainly some parts of this architecture that could be improved and/or made more resilient but for now it works well enough for my purposes.

Tell me what you think

Contact me on Twitter (@stut) or by email.

stut.dev
